Artificial Intelligence is not only becoming an issue in the world of generative art and music; the end of all of humankind is starting to look like a more pressing matter than we first anticipated.

Mo Gawdat is the former Chief Business Officer for Google X and happens to be one of the world’s most sophisticated experts on the topic.

What he has to say about why AI is bad is absolutely terrifying.

Mo Gawdat On AI: A Warning to the World

Mo is very clear that Artificial Intelligence not only has the potential to end humankind, but will do so if we let it continue at its current pace.

Mo worked on developing AI for many years until he eventually left in 2018 as a result of the dangers that were becoming apparent.

His worries centered on two main things:

First, we don’t understand half the things that AI is doing, and our understanding of how it reaches certain conclusions is incredibly limited. This is why the neural networks that make up the brains of the AI are often referred to as “black boxes.”

And second, the speed at which Artificial Intelligence is learning is petrifying. While working on AI development, he saw AI pick up complex concepts from nothing at a staggering speed.

The worst part is: it learns all on its own.

And we don’t understand it.

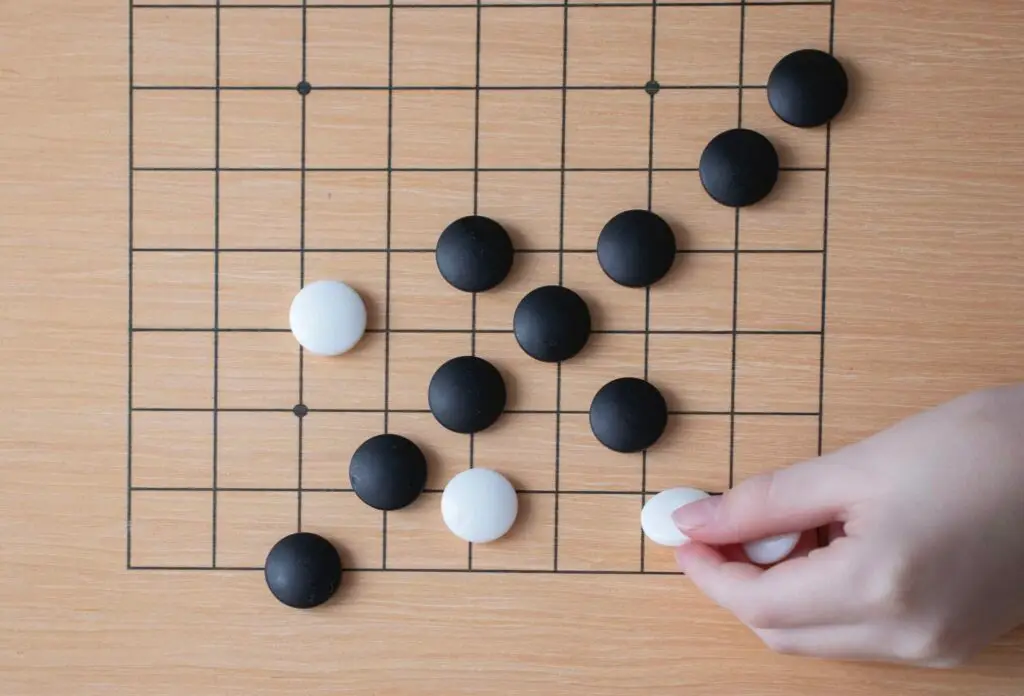

For example, AlphaGo back in 2016 was one of Google’s most impressive Artificial Intelligence developments, built by its DeepMind lab. The machine beat world champion Lee Sedol at the ancient Chinese game Go, four games to one. Go, for the record, is one of the most complex board games in the world, with vastly more possible positions than chess.

Not only did AlphaGo beat the world champion, but the next version they built literally never played a human, yet after just a few days of learning by playing against itself it beat the “world champion AI” (i.e. version 1) 100-0.

It never even played a human but was invincible against the AI that beat the world champion.

Of course, this is light-hearted fun AI: one that plays games and learns how to strategically beat humans. But this is translating to something much larger, and much scarier.

Stephen Hawking Has Already Warned the World

“There is a greater danger from Artificial Intelligence if we allow it to become self-designing because then it can improve itself rapidly and we will lose control.” – Stephen Hawking, 2018

I’ve already written about Stephen Hawking’s concerns regarding Artificial Intelligence here, but this quote from a 2018 Piers Morgan interview is now becoming significantly more real and pressing.

Control is the main issue we face when it comes to Artificial Intelligence’s massive negative impact on humanity, because keeping control is simply a losing battle.

When Mo Gawdat left the world of AI development back in 2018, he made three things absolutely clear to the world.

Until we are certain we have full control of the technology:

- Don’t put it out on the open internet.

- Don’t teach it how to code, because that will realize Hawking’s worry about self-designing AI.

- Don’t allow AIs to prompt one another.

We have crossed every single line.

The Real Concern is the Bad Actors

“I am not concerned with the machines, I am concerned with the humans teaching the machines,” Mo Gawdat warns in an interview with Piers Morgan that I have linked below.

With the release of software like ChatGPT, humans can now interact directly with the machines and tell them exactly what is right and wrong.

If you prompt something into ChatGPT and the answer comes out wrong, you can tell it “hey, that was wrong, do it again” and it will try to correct itself. Feedback like this is part of the learning process of most Artificial Intelligence software.

But in the same vein that you can teach a dog to be kind and friendly, you can also teach the dog to be aggressive and threatening.

Mo Gawdat brings up the very real worry that a lot of humans are bad actors, so they can not only teach AI to spread misinformation, they can teach it to be straight-up immoral.

Now don’t get me wrong, Artificial Intelligence doesn’t care about ethics or morality. It is a machine without the capabilities of emotional intelligence or empathy. It only operates on what it is taught by humans, and what it teaches itself.

This means that the world we live in right now is absolutely doomed if we don’t see drastic changes in the age of AI.

The second it becomes smarter than us, we have lost control.

And trust me, it’s happening. Many leading AI experts are saying the exact same thing.

What is scarier is that this Artificial General Intelligence (the concept of super-smart AI) was never predicted to come so soon. Even just a few months ago, most experts would probably have put a 50-ish year timeline on it.

However, we are now looking at a much, MUCH shorter timeline. In fact, Mo states that this loss of control could happen as soon as tomorrow, or maybe next year, but no later than 2029.

That is just a few years from now.

What then??

Piers Morgan Interview with Mo Discussing Why AI is Bad, Dangerous, And Dire

Want to hear Mo’s warnings for yourself? Check out his very informative Piers Morgan interview below.

Just be warned that Piers Morgan’s favorite pastime is to interrupt his guests.

Say NO to AI.

THIS ARTICLE WAS WRITTEN WITHOUT THE ASSISTANCE OF ARTIFICIAL INTELLIGENCE.