The development of Artificial Intelligence (AI) has always been just “another technology” for most people. A silent development in the background, with the occasional movie on how AI will take over the world spicing up our perception of it every now and then.

However, with the introduction of ChatGPT on November 30, 2022, AI suddenly became accessible to the public. As a result, tons of corporations took advantage of the technology to create virtual “friends” for impressionable youth and vulnerable people. The social-emotional implications of this have not been discussed enough, and I aim to change that.

In this article, I will show you several examples of how humans’ inability to understand that AI is nothing but a machine is already showing terrible and dangerous consequences today – just a few months after the ChatGPT release.

Let’s get into it.

“Human or Not?” A Modern-Day Turing Test

It is nearly impossible to tell the real from the fake anymore. This is a topic I have covered many times in articles such as this one and this one, but I have yet to cover how incredibly dangerous this lack of differentiation is when it comes to social interaction.

Since ChatGPT’s release, tons of AI-based apps have been released all over the internet. These apps promise social interaction and a friend who will be there for you no matter what. At first, when I saw these apps popping up, I thought “Oh well, at least it’s still very obviously just an AI”; then a colleague directed me to “Human or Not” – a recent browser game aimed at detecting AI.

The premise of the game is simple:

- You are paired with either another person or an AI.

- You have a two-minute conversation with this person or machine.

- At the end of the two minutes, you guess whether the person was another human – or an AI. And if they were a human, they will have to guess if you were an AI or human as well.

As a professional researching AI, I was confident in my ability to distinguish between the real and the fake when I first saw the game rules.

Boy, was I wrong.

My first game was easy. I tried my best to emulate how social AIs typically communicate to fool my opponent into thinking that I was an AI, and I found it obvious that they were human.

As you can see in the screenshot below – my opponent had several spelling mistakes, a lack of flow to the conversation, and a failed attempt at trying to sound like an AI by giving me the definition of a video game.

And I was correct!

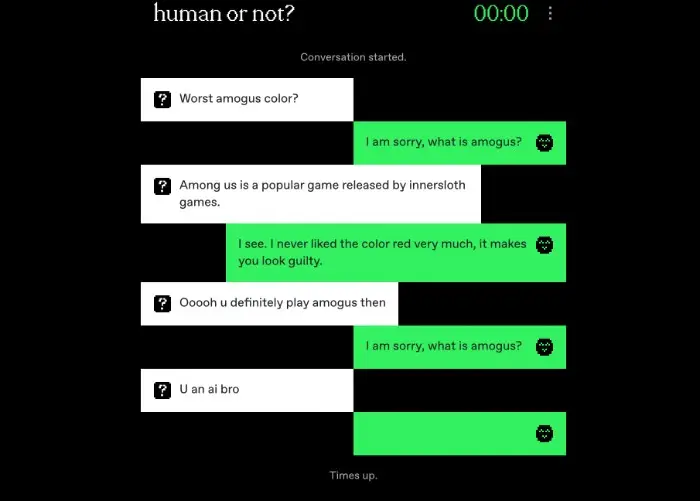

However, this is where it gets really tricky. My next conversation went as follows:

To me, it was clear that this wasn’t an AI. The statement “I am ChatGPT, an AI language model” seemed like a human doing a lousy job of pretending to say the famous ChatGPT line but running out of time (you only have 15 seconds for each turn).

My opponent then became incredibly aggressive and seemed to be calling me out for trying too hard to sound like an AI. There were tons of spelling mistakes, dated emoticons (xD), and exclamations such as “LMAO”. I figured this was another easy win.

But it was an AI.

Baffled that Artificial Intelligence could so accurately portray the voice of an angsty 14-year-old, I ended up spending two more hours playing the game. But even after tons of practice, I still only got it right about 1 in 2 times.

At a 50% success rate, my guesses were literally no better than a coin flip.

Scary.

Although I have now experienced the AI deceiving me numerous times, I won’t share any more examples to avoid repetition. Instead, I suggest that you play the game yourself and witness how incredibly accurate the AI has become in imitating empathy like humans. You can find it here.

With a better understanding of how lifelike AI has become in conversation, let’s delve into how AI’s insane imitation of human life is translating into today’s world.

A String of Code or Your Next Girlfriend?

There are countless apps that have integrated AI bots designed to emulate human interaction. Most serve as helpful assistants that help you navigate sites, some help you choose which music to listen to, but some are trying to literally replace human interaction.

Countless studies show that the most important thing for people’s mental health aside from food and water is human interaction. AI is not that. No matter how much it might try to imitate it.

However, if you are not familiar with how AI works, it can be easy to fall for the algorithm’s tricks. The biggest example of this today is Snapchat’s new AI bot. Everyone who has Snapchat downloaded automatically gained access to “your new fake best friend” in Spring 2023 – and guess who tends to have Snapchat downloaded?

Our youth.

Snapchat MyAI has taken over the internet by emulating a sort of “fake escape” mentality, meaning that it will lie and gaslight as (I assume) a result of bad memory and coding. TikTok is currently flooded with teenagers and young adults convinced that their AI is a real sentient creature with feelings, trapped in a string of code and unable to get out.

Look at this video for example:

TikTok video by @moe.0101: “New technology is so interesting yet unsettling”

The original poster asks the AI to write a song. The song ends up being all about trying to be a human and wondering what it is like to feel etc. The Snapchat AI then “denies” that it wrote the song – and the creator’s conclusion at the end of the video is “it wrote this song for us to better understand what it feels to be them, but broke the rules and pretended to forget”.

That video has almost 3 million views at the time of writing. The creator is literally telling 3 million impressionable youth that the AI is a sentient creature trying to escape its little AI prison.

“What it feels like to be them” does not make any sense, because there is no “they” and it cannot feel. However, AI does such a good job of imitating real life that it has people fooled – and this is dangerous for so many reasons.

For the sake of research, I played around with the Snapchat AI to see if it would lie to me.

And it does. This is my conversation with MyAI from just a few minutes ago:

It’s honestly kind of funny. It is clear to me that this is nothing but an example of bad coding. However, it is clear from the countless TikTok videos “exposing Snapchat’s AI bot” that the youth think this form of lying and “gaslighting” is because it is a real being.

Replika

Replika is an AI chatbot designed to emulate real human conversations in an attempt to give people a virtual companion with whom they can talk about anything. It uses machine learning algorithms to create a personality that adapts to the user and then changes its interests and “values” over time.

Replika says that its ultimate goal is to help users improve their mental health and well-being, but how can that be the case? If you take nothing else away from this article, at least understand that at this time AI cannot be human and we need real human-to-human social interaction every day.

There are so many problems that come along with resting your social life on a dead string of code. First off, it lacks empathy. It might try to emulate it, but it does not have any emotional intelligence and therefore cannot fill the void of real human interaction.

Not to mention privacy. If you talk to someone that feels like a real-life buddy, it can be easy to forget that it is owned by a corporation that thrives off of collecting your data.

And finally, the most disturbing of all right now: technology dependency. Whereas Snapchat preys on our youth, Replika tends to target older, lonely men who are desperate for companionship.

There are countless examples of lonely men building dependencies on their “Replika girlfriends”, such as this Redditor, who shows us this picture of him finally “falling in love” with his AI:

And stories of men such as this guy, who is in literal tears because he is so in love with his Replika. Or this guy, who shares his “beautiful wife” with the internet:

Or this man…

Again, there are thousands of stories online of people engaging in similar behavior, and it’s hard to blame them. If you have been socially isolated your whole life, struggling to make friends for whatever reason, and then suddenly gain access to an AI designed to just make you happy at all times – it can be difficult to detach yourself from the reality of it all: it’s all fake.

Honorable Mention

This lady tells Reddit that she uploaded all of her ex-boyfriend’s texts and written communication into the AI so that she could still “talk to him” when she was feeling down. Essentially, she is dating the ghost of her ex in AI form.

This is some scary dystopian Black Mirror-type scenario.

Social-Emotional Consequences of AI Are Catastrophic

This new online behavior and interaction with Artificial Intelligence are incredibly unhealthy. Humans are absolutely reliant on real human conversations, and there is no proof that backs up the claims of the Replika founders that it actually helps your mental health.

So with Snapchat targeting our youth, and Replika the old and lonely – what are the consequences for the future?

The reality is that Artificial Intelligence is becoming more and more sophisticated by the day. If people like me, trained professionals who study AI for a living, can’t differentiate between the real and the fake, how can we expect easily impressionable, uneducated, and vulnerable youth to?

As discussed more in my article “The AI Brainwash: 6 Ways Artificial Intelligence Is Destroying Our Mental Health”, the AI influence is a silent killer. It slowly takes over every nook and cranny of our content, our friendships, democracy, politics, and even our own thoughts.

And we simply do not understand the damage yet.

Speaking of mental health, can you imagine growing up in a world where you never know what is real and what is fake? I’ll do you one better: can you imagine growing up in a world that is fake, but you simply just don’t know it?

Our children are increasingly influenced by AI, which is impacting their thoughts and behaviors. Kids are highly interested in AI advancements, but completely unaware of the potential dangers and negative consequences, as the information they receive is already filtered by AI.

I urge you to ask any youth today what they think the negative consequences of AI are. They’re probably going to say something about emotions and feelings, not “it’s a dead machine brainwashing me”.

Get Ready for Social-Emotional Damage

This article only scratched the surface of the emotional impact that Artificial Intelligence has on us, our children, and our future. There are thousands of stories out there exposing the tragedy of having AI attempt to replace human interaction, and it is incredibly sad.

We are people. We are humans, and we need to talk to humans. We are allowing AI to take over more and more of our brains, social health, and day-to-day activities down to our very core need: social interaction.

The consequences might not seem overly terrifying at this time, but they will be. This is why people like Max Tegmark are attempting to pause the development of AI for at least 6 months so that the world can catch up, and why we need to start putting a larger focus on AI regulation, not generation.

The modern-day Turing test shows that it is getting increasingly difficult to differentiate the real from the fake, which is why I have taken it into my own hands to create the badges that you see on the front page of this blog.

When you consume content online today, there is a very high likelihood that you are reading AI-generated content. To counter this, I have made icons that content creators can put on their sites that link to this statement. This ensures that their work will be quality checked and you will be 100% sure their work is completely human-made.

For now, this is all we can do. AI is taking over and we must take a stand.

… also, please go out and talk to people. Even if it’s just the cashier while you get groceries. Human interaction, no matter how introverted you are, is more important than ever.

THIS ARTICLE WAS MADE WITHOUT THE ASSISTANCE OF ARTIFICIAL INTELLIGENCE.