I always knew AI was bad for fact-checking, but now my friends know it too. Let me tell you all about it.

Last week I was hanging out with some old buds from my early tech days when we started discussing the first iMac: how revolutionary it was at the time, how it was introduced into our work, and how far we have come since the tail-end of 1998.

“Tail-end of 1998? The iMac came out in early 1998,” my buddy said.

I disagreed, distinctly remembering that it was Christmastime when I first saw one in person.

Well, good thing Google exists.

I said, “Let’s google it,” and, I kid you not, my buddy said, “Let’s just ask ChatGPT.”

After a quick argument about how silly it is to ChatGPT something that you can just google, we both fact-checked in our own ways. I googled, and he asked ChatGPT.

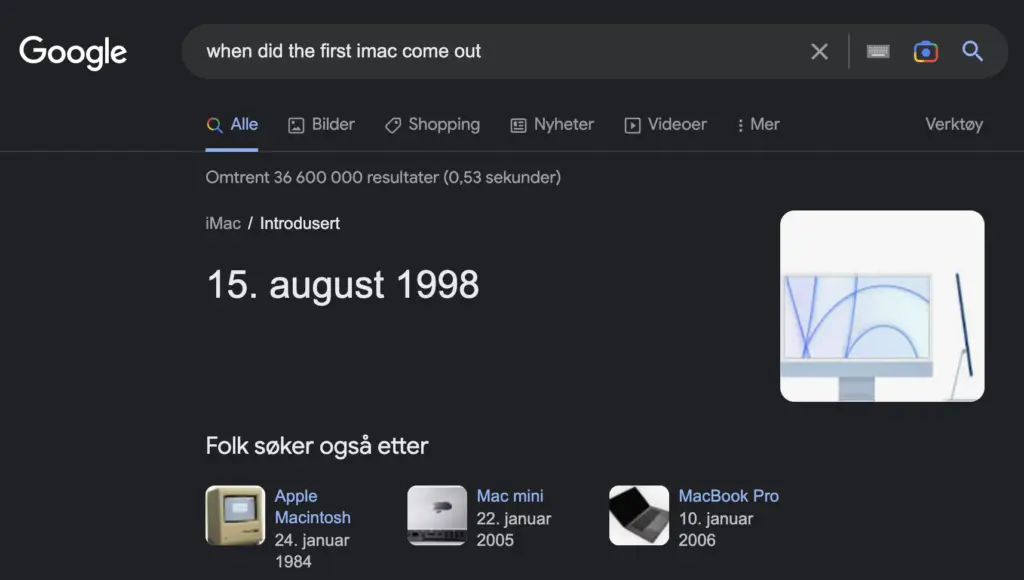

Alrighty. Looks like we were both wrong about exactly when it came out, but there is another issue at hand here: why did ChatGPT give a different date than Google?

The problem with AI language models like ChatGPT is that they literally just feed on an extremely large amount of data. ChatGPT knows there is a strong association between the iMac and the date May 6, 1998, so that is the date it gave us, even though we asked when it came out, not when it was announced.

The iMac was unveiled on May 6, 1998, but did not actually go on sale until August of that year.

This is, of course, an incredibly innocent example, and my buddy and I admitted we were both wrong and moved on (it has been 25 years, after all). But a point was made: ChatGPT cannot be trusted for informational fact-checking because it cannot conceptualize the way humans do.

This means that if you, like my buddy, use ChatGPT to fact-check anything, or as a substitute for your own critical thinking, there’s a very real chance you’re going to run into issues, errors, and misinformation.

How Does ChatGPT Get Its Information?

A massive issue with language models like ChatGPT is that people seem to treat them like all-knowing beings with infinite wisdom and “original” thoughts. This is an incredibly dangerous way of thinking.

Think of ChatGPT as just another guy. Probably white, and under the age of 30. This guy has a photographic memory, and every day from dawn till dusk he sits in front of his computer and reads the entire internet at extreme speed.

When you ask him a question, he doesn’t look anything up. He “guesses” the most likely answer based on the patterns in everything he has read, and that guess is what he gives you.

This guy is, of course, a superhuman with a vast amount of knowledge, but he is not God. He cannot produce content or information that properly conceptualizes anything, because he isn’t living any experiences of his own. He just sits in front of the computer all day and reads; he never builds relationships, empathy, or emotional intelligence. He just exists, devoid of everything that should make him human.

ChatGPT Really Is Just Another White Dude

I mentioned earlier that if ChatGPT were a person, it’d be a white guy under the age of 30. But why would I say that? A lifeless robot cannot have a race, or gender, or age, right?

Wrong.

AI is trained on “white male data,” which makes it implicitly biased. The truth is, the internet, and history in general, is biased towards white men. Systemic racism and gender inequality have been around for ages, and however much OpenAI claims it trains ChatGPT not to have this bias, it still does.

This means that when you use ChatGPT to aid you with fact-checking, critical thinking, or more subjective and emotionally charged topics, you are drawing from a source that is inherently biased.

ChatGPT doesn’t know what it’s like to live in poverty. To be a minority in America. To be a woman in a state with strict abortion laws. To be unsure of your gender. It has none of these traits and experiences, nor the struggles that come with them.

Instead, it reads about them in datasets and through algorithms that are inherently biased towards white men. Do you really want to hear or read about the black trans-woman experience in America from a white man?

ChatGPT Cannot Think For Itself

It is so incredibly important to remember that AI does not have the capacity for original thought or critical thinking. It cares about nothing. It has no empathy, no emotional intelligence, and no regard for human life.

Imagine a world where we only consume AI-generated content regurgitated from online algorithms. It would seriously harm our minds and mental health, which I discuss further in my article “The AI Brainwash: 6 Ways Artificial Intelligence Is Destroying Our Mental Health.”

It’s simply dystopian to believe that we can interact with machines the same way we interact with humans, which is why I find it so disturbing that apps like Snapchat are introducing AI “fake best friends” to interact with our youth.

Clearly AI for Fact Checking Doesn’t Work: Do This Instead

If you, like my buddy, are fact-checking something – please, please use your human brain and critical thinking skills. Google it, check sources, contextualize it, and form your own opinion.

I often check this page to verify information.

Artificial intelligence taking over content creation is seriously harming the way we take in information, which is why I believe all media that use AI should be required by law to carry a disclaimer clearly stating that AI was used to create that content.

For example, BuzzFeed, which just laid off 12% of its employees to AI, should clearly state that its main content creators are now dead strings of code.

However, it doesn’t seem like that is going to happen any time soon. So, as an alternative, I have created a way for content creators to easily show that their content is 100% human-made: they can put any of our free No-Ai badges on their page.

The badge then links to this statement, and their content is quality-checked by us to ensure that no AI was part of the process.

THIS ARTICLE WAS WRITTEN WITHOUT THE ASSISTANCE OF ARTIFICIAL INTELLIGENCE.