There are a million reasons why AI is bad for content, but this article will focus on the extremes that come with its wild, made-up imagination and its evil cycle of self-training.

The absolutely nuts influx of AI content on the internet is nothing new to most people. Since the release of large language models such as ChatGPT, the entire web has been poisoned with regurgitated, lifeless, and stale articles.

Articles that half the time, if not more, contain misinformation.

Don’t worry: this isn’t an article about how AI is a machine of fake news and misinformation. I’ve already written about that in other articles.

This article is about what the future looks like, because we’re seeing something that Mo Gawdat said we cannot let happen:

AI is now training itself with no human intervention, which is why AI is bad for content in the worst kind of way.

The Evil Loop of Made-up Bullcrap

AI language models such as ChatGPT are trained on massive amounts of data that OpenAI has scraped from the internet.

This means that it has effectively read a huge chunk of the web, and it bases its answers to your prompts on that information.

The internet is already riddled with misinformation, and sometimes the AI simply isn’t able to differentiate between what is correct and what is not.

That’s not the problem, though; any human could make the same mistake of googling something and simply clicking on the wrong link with the wrong information. After all, the whole shtick of the internet is that you can find “facts” to back up quite literally any claim if you try hard enough.

I mean, that’s why conspiracy theories and flat-earthers exist.

The problem with ChatGPT is that it is incredibly prone to simply making stuff up. In fact, it does it all the damn time, yet massive companies leaning on AI for content, like Buzzfeed and IBM, often don’t fact-check and just huck the information out onto the internet as if it’s fact.

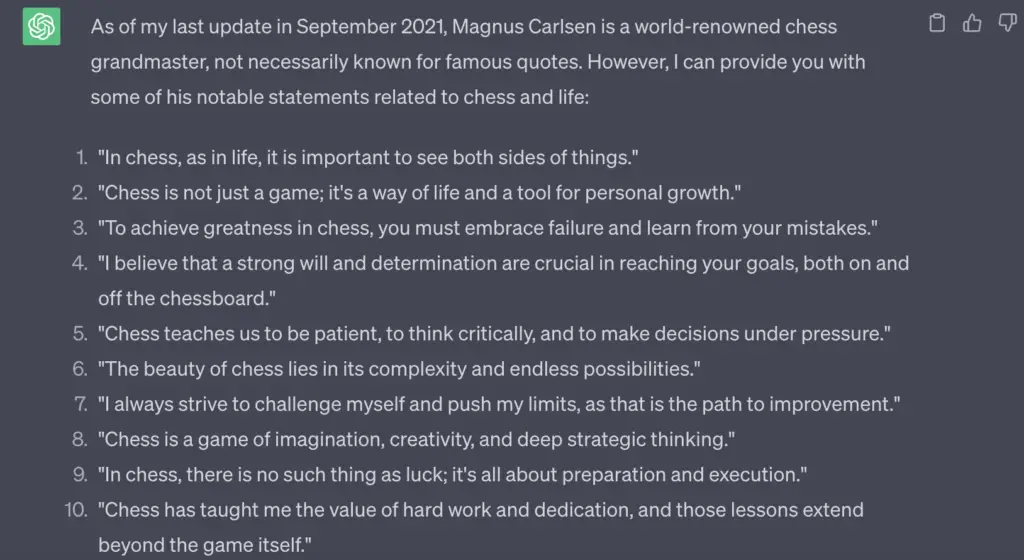

Let me demonstrate how easy and normal it is for ChatGPT to make crap up:

Magnus Carlsen is the best chess player in the world, undoubtedly. He’s also known for being quite a goofy guy with some funny statements, such as “sometimes I just make a move and don’t really know” (this guy drew Kasparov at age 13).

So let me ask ChatGPT to give me 10 quotes or statements by Magnus Carlsen.

How profound and deep!

But guess how many of those are actual quotes Magnus Carlsen said?

Literally not a single one of them.

Seriously, fact-check it.

So what happens when the next version scrapes the internet and finds this made-up information?

It’s like a feedback loop.

Someone asks ChatGPT to write an article about Magnus Carlsen.

ChatGPT writes an entire article riddled with bullshit, and the author just assumes the AI is correct and tosses it out onto the internet.

A later version of the AI trains on a dataset that includes that article, written by an earlier version of itself. Instead of fact-checking, it sees that someone published these quotes, which, as far as it can tell, means they’re “true”.

And all of a sudden it’s as if all these quotes are fact.

Repeat this hundreds of millions of times with all sorts of information, and all of a sudden it becomes impossible to distinguish what is real from what is made-up nonsense by a machine.

But who cares? The quotes kinda make Carlsen look smart

Yeah, in this instance no one is really hurt. I mean, these quotes kinda just make Magnus Carlsen look like a profound chess philosopher.

So why care?

Because this is a harmless case.

It gets significantly more dangerous when people write health articles, engineering instructions, and safety manuals, just to name a few.

“But people wouldn’t use Artificial Intelligence for that, right? That would be dangerous!”

Well, they do. That’s how the world works.

Greedy corporations that need to mass-produce content for money will use AI in any way they can, which is why we’re seeing a poisoned internet where, even when people do read an article, they don’t know whether it’s real or not.

So what can I do?

I am happy you asked!

I’ve had a feeling this was going to happen ever since the birth of OpenAI.

See, I’m not just a hater screaming at ChatGPT from the sidelines. I used it a lot when it first came out, which is when I realized how insanely dangerous it is.

I then started noticing that the articles I was reading online were clearly written by ChatGPT. And trust me, I’m very familiar with what AI-written text looks like. You can even read my guide on how to spot AI in the wild!

Regardless, that is why I created not only this blog but also these free NO-AI icons that content creators can put on their websites to let their readers know that everything on their site is 100% human-created.

Simply download the icon, put it on your site, and have it link to this statement.

We then go through the websites regularly to verify this, and boom: your readers can know for sure that everything written is human.

This is important not only for the sake of stopping misinformation but for our mental health and future.

Why AI is Bad For Content

This influx of artificially generated content from a machine that not only lacks empathy but is happy to make up the most random bullcrap without remorse or any moral compass is going to lead to our inevitable demise.

Do your part in stopping the AI epidemic.

Say NO to AI.

THIS ARTICLE WAS WRITTEN WITHOUT THE ASSISTANCE OF ARTIFICIAL INTELLIGENCE.